Missing Character Prediction in Words with BiLSTM and Attention

In this article, we explore how to predict missing characters in words using a BiLSTM with Attention, focusing on data creation and tuning.

Introduction

Let’s say we are given a dictionary of 250k words of different lengths [2] and are asked to build a deep learning model that can predict the missing characters in an unseen word.

Example Test Cases:

Ex. 1

Word: Application

Word with missing characters: A__lic_tion

Prediction of missing character: either p or a

Ex. 2

Word: exit

Word with missing characters: _x__

Prediction of missing character: any of e, i, or t

Ex. 3

Word: Earth

Word with missing characters: _arth

Prediction of missing character: e

As the examples above show, the test cases can be very tricky: even a human struggles to guess the characters when many of them are missing. The model therefore has to be robust and able to predict characters in words where several characters are missing at once.
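To make the scoring rule in these examples explicit: a prediction counts as correct if it is any of the characters hidden behind an underscore. The sketch below computes that set; the helper name `valid_answers` is hypothetical, and the comparison is case-insensitive because the examples mix capitalised and lowercase words.

```python
def valid_answers(word: str, masked: str) -> set:
    """Return the characters hidden by '_' in `masked` (hypothetical helper, case-insensitive)."""
    if len(word) != len(masked):
        raise ValueError("word and masked word must have the same length")
    return {w for w, m in zip(word.lower(), masked.lower()) if m == "_"}


print(sorted(valid_answers("Application", "A__lic_tion")))
print(sorted(valid_answers("exit", "_x__")))
print(sorted(valid_answers("Earth", "_arth")))
```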

Problem statement

Let us now structure the problem. Given a word with missing characters, the model should output an array of probabilities over the characters in the vocabulary, and we choose the most probable one. If the prediction is correct, i.e. the character is present in the word, we fill it in and apply the model again to the updated word to predict the remaining characters, and so on. If it is incorrect, we choose the next character in the list sorted by probability.

Example:

Case 1

E__m >> [x,a,…]

Ex_m >> [a,c,…]

>> Exam

Case 2

E__m >> [c,x,a,…] (c is not in the word, so we choose x, the item with the second-highest probability)

Ex_m >> [a,c,…]

>> Exam
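The guessing procedure above can be sketched as a loop. This is a minimal illustration, assuming a hypothetical `predict_proba` function that wraps the trained model and returns a {character: probability} dictionary for a masked word; the toy predictor simply hard-codes the probabilities from Case 2 (with lowercase words, as in the dictionary).

```python
from typing import Callable


def solve_word(word: str, masked: str, predict_proba: Callable[[str], dict]):
    """Fill `masked` by repeatedly guessing the most probable untried character.

    `predict_proba` is a hypothetical stand-in for the model.
    Returns the completed word and the list of guesses made, in order.
    """
    guessed = []
    while "_" in masked:
        probs = predict_proba(masked)
        # Rank characters from most to least probable and skip those already tried
        ranked = sorted(probs, key=probs.get, reverse=True)
        guess = next(c for c in ranked if c not in guessed)
        guessed.append(guess)
        if guess in word:
            # Correct guess: reveal every position that holds this character
            masked = "".join(w if w == guess else m for w, m in zip(word, masked))
    return masked, guessed


def toy_predictor(masked: str) -> dict:
    # Hypothetical probabilities reproducing Case 2
    if masked == "e__m":
        return {"c": 0.5, "x": 0.3, "a": 0.2}
    return {"a": 0.6, "c": 0.4}


print(solve_word("exam", "e__m", toy_predictor))
```

A wrong guess leaves the masked word unchanged, so the model's ranking is unchanged too; skipping already-tried characters is what moves us to the next item in the sorted list.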

Generating Training Data

We are given a list of words, referred to as the full dictionary (`full_dictionary` in the code). Using this dictionary, we first create words with missing characters (inputs) and the missing characters as target variables (outputs).

Note how we also remove an additional character from a word to create more samples that predict the same target character. In the example below, the first sample is created by removing “a”, and the second sample is generated by removing “x” as well. This makes the model more robust to words with multiple missing characters.

Sample 1: Ex_m >>> a

Sample 2: E__m >>> a

Every input array must have the same length, so we determine the maximum word length in the dictionary and use it as the array size. We then map each character to an integer index and pad each sequence, so that every input becomes an integer array of that fixed length and every target becomes a single integer index.
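The indexing and padding step can be illustrated without Keras. The sketch below produces roughly what `pad_sequences(..., padding='post')` returns; the function name `encode_and_pad` is hypothetical, and the toy vocabulary assumes lowercase letters a-z plus `_`, with a separate padding index so that padded positions are never confused with the character `a`.

```python
import numpy as np


def encode_and_pad(words, char_to_index, max_length, pad_value):
    """Map each word to character indices and right-pad ("post") to max_length.

    Assumes no word is longer than max_length (true when max_length is the longest word).
    """
    out = np.full((len(words), max_length), pad_value, dtype=np.int32)
    for row, word in enumerate(words):
        indices = [char_to_index[c] for c in word]
        out[row, :len(indices)] = indices
    return out


# Toy vocabulary (an assumption for illustration): a-z -> 0-25, '_' -> 26, padding -> 27
letters = "abcdefghijklmnopqrstuvwxyz"
char_to_index = {c: i for i, c in enumerate(letters + "_")}
PAD = len(char_to_index)

samples = ["ex_m", "e__m", "a__lication"]
max_length = max(len(w) for w in samples)
print(encode_and_pad(samples, char_to_index, max_length, PAD))
```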

Finally, we split the data into three groups:

- Training set (70%)

- Validation set (15%)

- Test set (15%)

```python
import random

import numpy as np
from sklearn.model_selection import train_test_split
from tensorflow.keras.preprocessing.sequence import pad_sequences

# Set seed for reproducibility
random.seed(40)

# Full word list; assumes `full_dictionary` (a list of lowercase words) is defined elsewhere
words = full_dictionary


def generate_training_data_3(words):
    """
    Generate training data by creating samples with missing characters.

    Args:
    - words (list): List of words from which training data is generated

    Returns:
    - X (list): List of input strings, with missing characters shown as "_"
    - y (list): List of corresponding target characters
    """
    X = []
    y = []
    for word in words:
        for char in sorted(set(word)):
            # Input with one missing character (all of its occurrences are hidden)
            X.append(word.replace(char, "_"))
            y.append(char)
            try:
                # Input with a second, randomly chosen character hidden as well (same target)
                missing_char = random.choice(word.replace(char, ""))
                X.append(word.replace(char, "_").replace(missing_char, "_"))
                y.append(char)
            except IndexError:
                pass  # Word has only one distinct character, so there is nothing else to hide
    return X, y


# Build (masked word, target character) pairs from the full dictionary
words_3, labels_3 = generate_training_data_3(words)

# Determine the maximum length of words in the dataset
max_length_3 = max(len(word) for word in words_3)

# Character-to-index mapping: letters in sorted order, then "_". Sorting makes the
# mapping identical across runs (iterating over a raw set of strings is not).
chars = sorted(set("".join(words_3)) - {"_"}) + ["_"]
char_to_index = {char: idx for idx, char in enumerate(chars)}
# Reserve a separate index for padding so padded positions are not read as a real character
PAD_TOKEN = "<pad>"
char_to_index[PAD_TOKEN] = len(chars)
index_to_char = {idx: char for char, idx in char_to_index.items()}

# Convert words and labels to numerical format, padding every input to max_length_3
X_padded_3 = pad_sequences([[char_to_index[char] for char in word] for word in words_3],
                           maxlen=max_length_3, padding='post',
                           value=char_to_index[PAD_TOKEN])
y_padded_3 = np.array([char_to_index[label] for label in labels_3])

# Split the dataset into training (70%), validation (15%) and test (15%) sets
X_train_3, X_temp_3, y_train_3, y_temp_3 = train_test_split(X_padded_3, y_padded_3,
                                                            test_size=0.3, random_state=42)
X_val_3, X_test_3, y_val_3, y_test_3 = train_test_split(X_temp_3, y_temp_3,
                                                        test_size=0.5, random_state=42)
```

Training sample:

(input) a__lication >>> [[ 0 26 26 11 8 2 0 19 8 14 13 ]] (output) p >>> 15

Model

We use Keras with TensorFlow to build the deep learning model. Let’s now look at the overall architecture of the model:

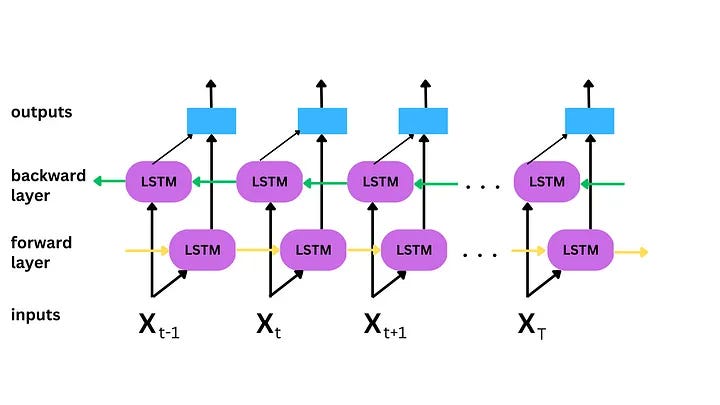

As we can see, the input tensor is first fed into an embedding layer and then passes through three Bidirectional LSTM layers, each followed by a dropout layer. An attention layer follows, and finally a dense layer with softmax activation outputs a probability for each character in the vocabulary.
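To make the shapes concrete: the embedding turns a (batch, max_length) index tensor into (batch, max_length, 50); each BiLSTM returns (batch, max_length, 512) because the outputs of the two 256-unit directions are concatenated; the attention layer pools this to (batch, 512); and the dense layer maps it to (batch, vocab_size). The sketch below counts trainable parameters per layer from these shapes, which can be compared with `model_6.summary()`. The vocabulary size and maximum length in the usage line are assumed values, not figures from this article.

```python
def lstm_params(input_dim: int, units: int) -> int:
    # Keras LSTM: kernel (input_dim, 4*units) + recurrent kernel (units, 4*units) + bias (4*units)
    return 4 * units * (input_dim + units + 1)


def model_params(vocab_size, max_length, embedding_dim=50, lstm_units=256, num_layers=3):
    """Trainable parameters per layer for the architecture described above (a sketch)."""
    counts = {"embedding": vocab_size * embedding_dim}
    input_dim = embedding_dim
    for i in range(num_layers):
        # Bidirectional holds two LSTMs; their outputs are concatenated (2 * units features)
        counts[f"bilstm_{i + 1}"] = 2 * lstm_params(input_dim, lstm_units)
        input_dim = 2 * lstm_units
    # AttentionLayer: W has shape (features, 1) and b has shape (timesteps, 1); dropout has none
    counts["attention"] = input_dim + max_length
    counts["dense"] = input_dim * vocab_size + vocab_size
    return counts


counts = model_params(vocab_size=28, max_length=29)  # assumed toy values
print(counts, sum(counts.values()))
```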

We use Keras’s checkpoint and early-stopping callbacks to select the best model based on validation loss.
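The interaction between the two callbacks can be sketched in plain Python. This simplified version ignores options such as `min_delta` and `restore_best_weights`, so treat it as an approximation of the Keras behaviour rather than its implementation: it records the epoch with the lowest validation loss (where `ModelCheckpoint(save_best_only=True)` would save) and stops once the loss has failed to improve for `patience` consecutive epochs.

```python
def simulate_callbacks(val_losses, patience):
    """Simplified ModelCheckpoint(save_best_only=True) + EarlyStopping(mode='min').

    Returns (best_epoch, stopped_epoch), both 1-based; stopped_epoch is None
    if training runs through every epoch in `val_losses`.
    """
    best_loss = float("inf")
    best_epoch = None
    wait = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss:
            # New minimum: the checkpoint callback would overwrite the saved model here
            best_loss, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return best_epoch, epoch
    return best_epoch, None


print(simulate_callbacks([1.0, 0.8, 0.85, 0.9, 0.95, 0.7], patience=3))
print(simulate_callbacks([1.0, 0.9, 0.95], patience=3))
```

The second call mirrors the script’s `epochs=3` with `patience=3`: the first epoch always counts as an improvement, so at most two non-improving epochs can follow and early stopping never fires.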

Below is the script for training the deep learning model and continuously tracking its performance on the validation set (the full notebook is available at [1]).

```python
import tensorflow as tf
from tensorflow.keras.callbacks import EarlyStopping, ModelCheckpoint
from tensorflow.keras.layers import LSTM, Bidirectional, Dense, Dropout, Embedding, Input, Layer
from tensorflow.keras.models import Sequential

# Save the model whenever the validation loss reaches a new minimum
checkpoint_callback_6 = ModelCheckpoint('best_model_6.keras',
                                        monitor='val_loss',
                                        save_best_only=True,
                                        mode='min',
                                        verbose=1)

# Define an early stopping callback
early_stopping_callback = EarlyStopping(monitor='val_loss',
                                        patience=3,  # Stop training if no improvement after 3 epochs
                                        mode='min',  # Minimize validation loss
                                        verbose=1)  # Print messages about early stopping

# Define parameters
vocab_size = len(char_to_index)  # Size of vocabulary (unique characters)
embedding_dim = 50  # Dimension of character embeddings
lstm_units = 256  # Number of units in each LSTM direction
num_layers = 3  # Number of BiLSTM layers
dropout_rate = 0.1  # Dropout rate


# Custom attention layer: scores each time step, softmax-normalises the scores
# over the sequence, and returns the attention-weighted sum of the BiLSTM outputs
@tf.keras.utils.register_keras_serializable()
class AttentionLayer(Layer):
    def __init__(self, **kwargs):
        super().__init__(**kwargs)

    def build(self, input_shape):
        # input_shape: (batch, timesteps, features)
        self.W = self.add_weight(name="att_weight", shape=(input_shape[-1], 1),
                                 initializer="random_normal")
        self.b = self.add_weight(name="att_bias", shape=(input_shape[1], 1),
                                 initializer="zeros")
        super().build(input_shape)

    def call(self, x):
        et = tf.squeeze(tf.tanh(tf.matmul(x, self.W) + self.b), axis=-1)  # (batch, timesteps)
        at = tf.nn.softmax(et)  # weights sum to 1 over the time steps
        at = tf.expand_dims(at, axis=-1)  # (batch, timesteps, 1)
        output = x * at
        return tf.reduce_sum(output, axis=1)  # (batch, features)


# Define Sequential model with a fixed input length
model_6 = Sequential()
model_6.add(Input(shape=(max_length_3,)))
model_6.add(Embedding(input_dim=vocab_size, output_dim=embedding_dim))

# Add BiLSTM layers, each followed by Dropout
for _ in range(num_layers):
    model_6.add(Bidirectional(LSTM(units=lstm_units, return_sequences=True)))
    model_6.add(Dropout(rate=dropout_rate))

# Attention pooling over the sequence, then one probability per character
model_6.add(AttentionLayer())
model_6.add(Dense(units=vocab_size, activation='softmax'))

model_6.compile(optimizer='adam',
                loss='sparse_categorical_crossentropy',
                metrics=['accuracy'])
model_6.summary()

# Note: with epochs=3 and patience=3, early stopping cannot trigger;
# increase epochs to let it act
model_6.fit(X_train_3,
            y_train_3,
            epochs=3,
            batch_size=256,
            validation_data=(X_val_3, y_val_3),
            verbose=1,
            callbacks=[checkpoint_callback_6, early_stopping_callback])
```

Why this architecture?

BiLSTM: a bidirectional LSTM is a type of RNN (recurrent neural network), commonly used for sequential data such as time series or NLP problems [3]. In our case, the characters in a word also have sequential dependencies, with both past and future context.

Why not LSTM: a unidirectional LSTM only provides context from the preceding characters in a sequence [4]. That is not sufficient here, since characters at the end of a word can also provide context about characters at its beginning.

Embedding Layer: the embedding layer learns a dense vector for each character, capturing relationships between characters and helping the model generalize across the contexts in which they appear.

Attention Layer: the attention layer puts more emphasis on the characters that add the most context to the overall word. For example, if a word contains q, that character should receive a high weight: only a few words contain q, so its presence and position add a lot to the model’s predictive power.

Dropout Layer: dropout is used for regularization, which helps prevent the model from overfitting.
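The attention layer in the training script reduces to a few array operations: score each character position with tanh(xW + b), normalise the scores with a softmax over positions, and return the weighted sum of the BiLSTM outputs. The NumPy sketch below does this for a single word; the random toy values are assumptions standing in for trained weights and real BiLSTM outputs.

```python
import numpy as np


def attention_pool(x, W, b):
    """NumPy version of AttentionLayer.call for one word (no batch axis).

    x: (timesteps, features) BiLSTM outputs
    W: (features, 1) scoring weights; b: (timesteps, 1) per-position bias
    Returns the pooled (features,) vector and the (timesteps,) attention weights.
    """
    scores = np.tanh(x @ W + b).squeeze(-1)  # one score per character position
    weights = np.exp(scores - scores.max())  # numerically stable softmax
    weights /= weights.sum()
    pooled = (x * weights[:, None]).sum(axis=0)  # weighted sum over positions
    return pooled, weights


rng = np.random.default_rng(0)
x = rng.normal(size=(5, 4))  # toy example: 5 positions, 4 features
W = rng.normal(size=(4, 1))
b = np.zeros((5, 1))
pooled, weights = attention_pool(x, W, b)
print(weights.round(3), weights.sum())
```

With all-zero scoring weights every position gets the same weight and the layer reduces to a plain average; learned weights let informative positions dominate instead.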

Once we know the number of epochs at which the model reaches its lowest validation loss, we can retrain it for that many epochs on the whole dataset (train + validation + test), so that the final model has seen as many words as possible before it handles new ones.
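The two steps of that procedure can be sketched with NumPy. The helper names are hypothetical, and the commented usage assumes the fit call is assigned to a `history` variable (the script above does not keep it) and that `final_model` is a freshly built copy of the same architecture.

```python
import numpy as np


def best_epoch_count(val_losses):
    """1-based epoch with the lowest validation loss, e.g. from history.history['val_loss']."""
    return int(np.argmin(val_losses)) + 1


def merge_splits(*arrays):
    """Concatenate the train/validation/test arrays back into one dataset (sample axis)."""
    return np.concatenate(arrays, axis=0)


# Usage sketch with the variables from the training script (not run here):
# history = model_6.fit(...)
# epochs = best_epoch_count(history.history["val_loss"])
# X_full = merge_splits(X_train_3, X_val_3, X_test_3)
# y_full = merge_splits(y_train_3, y_val_3, y_test_3)
# final_model.fit(X_full, y_full, epochs=epochs, batch_size=256)
```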

Conclusion and Model Performance

After performing multiple experiments to choose the optimal number of BiLSTM units and layers, the dropout percentage, the embedding layer size, and the number of epochs, we arrive at our best-performing model. Below is the performance of this model on the validation and test sets.

Our model performs very well on the validation and test sets.

Future improvements

We could further improve this model by leveraging more advanced techniques such as Transformers, or pre-trained models such as BERT.

If you liked the explanation, follow me for more! Feel free to leave a comment if you have any questions or suggestions.

You can also check out my other articles on data science and computing on Medium. If you like my work and want to contribute to my journey, you can always buy me a coffee :)

Reference

[1] Github link to the notebook: https://github.com/girish9851/BiLSTM-with-Attention-Missing-Characters-in-a-Word/blob/main/bilstm-attention-hangman-625_score_full_train-Copy1.ipynb

[2] 250k-word dictionary (hangman dataset): https://github.com/methi1999/hangman/blob/master/dataset/250k.txt

[3] BiLSTM: https://www.youtube.com/watch?v=_bt9OaavkT4

[4] BiLSTM vs LSTM: https://medium.com/@souro400.nath/why-is-bilstm-better-than-lstm-a7eb0090c1e4
